Speeding up an nginx webserver


After properly securing my nginx webserver (vanwerkhoven.org), I tweaked its caching and connection settings to improve performance as measured by www.webpagetest.org (webpagetest.org). The steps are documented below.

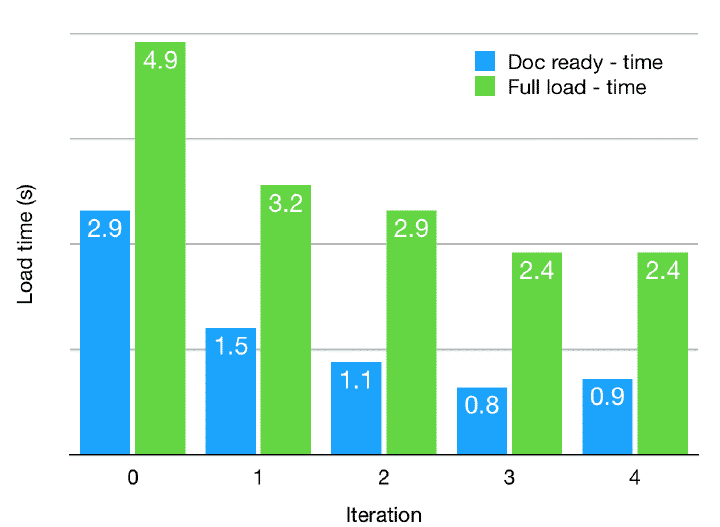

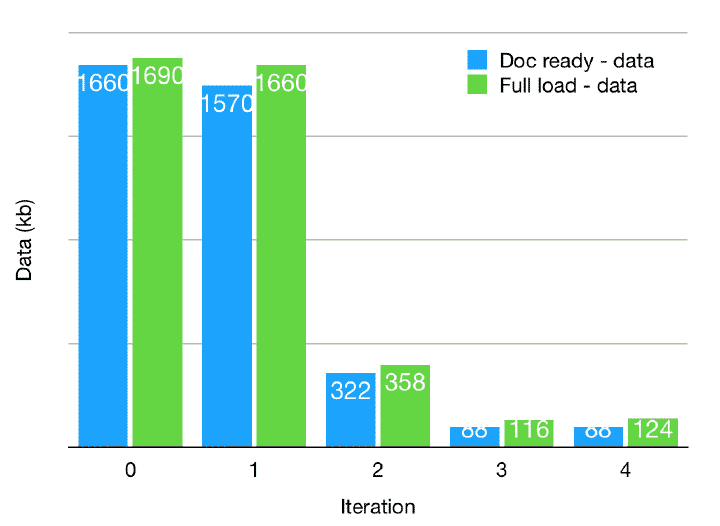

The results are as follows: I achieved a 3.2x faster document-ready time (2.9s to 0.9s) and reduced bandwidth by 18x. Click on an iteration below to see its webpagetest.org results:

| iteration | change | Doc ready | Full load |
|---|---|---|---|
| 0 (webpagetest.org) | baseline | 2.9s | 13 reqs |
| 1 (webpagetest.org) | http2, combine files | 1.5s | 5 reqs |
| 2 (webpagetest.org) | gzip | 1.1s | 4 reqs |
| 3 (webpagetest.org) | compile js | 0.8s | 5 reqs |
| 4 (webpagetest.org) | cache-control | 0.9s | 5 reqs |
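The 3.2x figure follows from the document-ready column: 2.9s / 0.9s. This small Python snippet recomputes the speedup of every iteration relative to the baseline, using only the values from the table; the dictionary keys are just labels chosen for illustration. Note that iteration 3 (0.8s) would correspond to roughly 3.6x, while the headline figure uses the final iteration.

```python
# Document-ready times in seconds, copied from the table above.
doc_ready = {
    "0 baseline": 2.9,
    "1 http2, combine files": 1.5,
    "2 gzip": 1.1,
    "3 compile js": 0.8,
    "4 cache-control": 0.9,
}


def speedups(times: dict, baseline_key: str) -> dict:
    """Speedup of each measurement relative to the baseline measurement."""
    base = times[baseline_key]
    return {name: base / seconds for name, seconds in times.items()}


for name, factor in speedups(doc_ready, "0 baseline").items():
    print(f"{name:<24} {doc_ready[name]:.1f}s  {factor:.1f}x")
```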

Iter 1: Add HTTP2, combine files

HTTP/2 has many advantages over HTTP/1.1 (wikipedia.org), one of them being speed. Nginx has supported it since September 2015, i.e. version 1.9.5 (nginx.com). Note that the current (January 2019) version of nginx on Debian stretch is 1.10.3, which is newer than 1.9.5 (I was briefly confused by reading ‘1.10’ as ‘1.1’, which looks lower than ‘1.9’).
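The ‘1.1’ versus ‘1.9’ confusion is the classic pitfall of comparing version numbers as text. A minimal Python sketch (the helper name `version_tuple` is made up for this example) shows that comparing the dotted components as integers gives the right answer, while plain string comparison does not:

```python
def version_tuple(version: str) -> tuple:
    """Split a dotted version such as '1.10.3' into integers: (1, 10, 3)."""
    return tuple(int(part) for part in version.split("."))


installed = "1.10.3"  # nginx on Debian stretch (January 2019)
required = "1.9.5"    # first nginx release with HTTP/2 support

# String comparison goes character by character, and '1' < '9' at the third character.
print(installed >= required)                                # False (misleading)
# Integer comparison of the components: 10 > 9.
print(version_tuple(installed) >= version_tuple(required))  # True
```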

Following these instructions (digitalocean.com), I enabled HTTP/2 in /etc/nginx/sites-enabled/default by adding the `http2` parameter to the `listen` directives:

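```nginx
server {
    # SSL configuration
    #
    listen 443 ssl http2 default_server;
    listen [::]:443 ssl http2 default_server;
    # (nginx 1.25.1 and later deprecate this 'http2' listen parameter
    #  in favour of a separate 'http2 on;' directive)

    # ... rest of the server block (certificates, root, etc.) unchanged ...
}
```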
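To check from the client side that HTTP/2 is actually used, one option is to look at the protocol agreed during the TLS handshake (ALPN), which is how browsers negotiate HTTP/2 over HTTPS. This is a minimal sketch using only Python's standard library; the function name `negotiated_protocol` is hypothetical, and it assumes the server is reachable on port 443 with a valid certificate.

```python
import socket
import ssl
from typing import Optional


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Return the protocol the server selects via ALPN ('h2' means HTTP/2)."""
    context = ssl.create_default_context()
    # Offer HTTP/2 first and HTTP/1.1 as a fallback, as a browser would.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            # None if the server did not take part in ALPN at all.
            return tls.selected_alpn_protocol()
```

For example, `print(negotiated_protocol("vanwerkhoven.org"))` should print `h2` once the `http2` parameter is active; `http/1.1` would suggest the server is not offering HTTP/2.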

Additionally, I reduced the number of requested CSV data files from 16 to 4 by concatenating the data, and removed some less important external CSS files.
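Concatenating data files can be scripted. This is a sketch, not the script used for this site: it assumes that the files being merged share the same header row (for example, several files of the same sensor), and the function name `concatenate_csv` is hypothetical. It writes the header once and refuses to merge files whose headers differ.

```python
import csv
from pathlib import Path


def concatenate_csv(inputs: list, output: Path) -> int:
    """Merge CSV files with identical headers into one file; return the number of data rows."""
    header = None
    rows_written = 0
    with output.open("w", newline="") as out_file:
        writer = csv.writer(out_file)
        for path in inputs:
            with Path(path).open(newline="") as in_file:
                reader = csv.reader(in_file)
                file_header = next(reader, None)
                if file_header is None:
                    continue  # skip empty files
                if header is None:
                    header = file_header
                    writer.writerow(header)  # write the header only once
                elif file_header != header:
                    raise ValueError(f"{path} has a different header: {file_header}")
                for row in reader:
                    writer.writerow(row)
                    rows_written += 1
    return rows_written
```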

Iter 2: gzip

Even though a Raspberry Pi is not very fast, it’s still faster overall to gzip content before sending it. To this end I added the following to /etc/nginx/nginx.conf:

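```nginx
http {
    ##
    # Gzip Settings
    ##

    gzip on;
    # Skip compression for Internet Explorer 6
    gzip_disable "msie6";

    # Send "Vary: Accept-Encoding" so caches keep gzipped and plain copies apart
    gzip_vary on;
    # Also compress responses to requests that arrive through a proxy
    gzip_proxied any;
    # Compression level 1-9: 6 balances CPU time against size
    gzip_comp_level 6;
    # 16 buffers of 8 kB each for the compressed response
    gzip_buffers 16 8k;
    # Minimum HTTP version of a request for which responses are compressed
    gzip_http_version 1.1;
    # MIME types to compress (text/html is always compressed)
    gzip_types text/plain text/csv text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;
}
```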

Most of these lines may already be in your nginx.conf, commented out.
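To get a feel for how much gzip saves on text such as CSV, Python's `gzip` module (which, like nginx, uses zlib's deflate) can compress a sample at the same level as `gzip_comp_level 6`. The data below is synthetic and made up purely for illustration, so the exact ratio says nothing about this site; run it on your own files for real numbers.

```python
import gzip

# Synthetic CSV-like sensor data: 500 rows at 5-minute intervals (made-up values).
lines = ["timestamp,temperature,humidity"]
for minute in range(0, 500 * 5, 5):
    lines.append(f"2019-01-01T{minute // 60 % 24:02d}:{minute % 60:02d}:00,21.{minute % 10},45")
payload = ("\n".join(lines) + "\n").encode()

# compresslevel=6 mirrors 'gzip_comp_level 6' in the nginx config.
compressed = gzip.compress(payload, compresslevel=6)
print(f"{len(payload)} bytes -> {len(compressed)} bytes "
      f"({len(payload) / len(compressed):.1f}x smaller)")
```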

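To gzip CSV data, I also had to add the text/csv MIME type for the .csv extension to /etc/nginx/mime.types, as instructed here (serverfault.com). Otherwise nginx serves .csv files with its default type, so the text/csv entry in `gzip_types` never matches.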
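Why the mime.types entry matters can be shown with a deliberately simplified Python model of nginx's decision. It is not nginx code: the function `would_gzip` and the small mime.types excerpt are hypothetical, and it ignores `Accept-Encoding`, `gzip_min_length` and `gzip_proxied`. It assumes `default_type application/octet-stream`, as in the stock nginx.conf. The extension is looked up to find the content type, unknown extensions fall back to the default type, and only types in `gzip_types` (plus text/html) are compressed.

```python
# gzip_types from the config above; nginx always compresses text/html as well.
GZIP_TYPES = {
    "text/plain", "text/csv", "text/css", "application/json",
    "application/javascript", "text/xml", "application/xml",
    "application/xml+rss", "text/javascript",
}
DEFAULT_TYPE = "application/octet-stream"  # assumed 'default_type' value


def would_gzip(filename: str, mime_types: dict) -> bool:
    """Simplified model: would nginx gzip this file, given an extension -> type map?"""
    extension = filename.rsplit(".", 1)[-1].lower()
    content_type = mime_types.get(extension, DEFAULT_TYPE)
    return content_type == "text/html" or content_type in GZIP_TYPES


# Hypothetical mime.types excerpt without a csv entry.
mime_types = {"html": "text/html", "css": "text/css", "js": "application/javascript"}
print(would_gzip("data.csv", mime_types))  # False: served as application/octet-stream

mime_types["csv"] = "text/csv"             # the entry added to /etc/nginx/mime.types
print(would_gzip("data.csv", mime_types))  # True
```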

Iter 3: Compile javascript

I’m using a 1.6 MB JavaScript library. It already compresses well with gzip (because it’s text), but compiling/minifying it first makes the transferred file even smaller. To this end I used Google’s Closure Compiler (appspot.com) with only ‘simple’ optimisations, which reduced the JS file size from 1532 kB to 220 kB. The minified file won’t gzip quite as efficiently, since much of the redundancy is already gone, but that’s fine.
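What ultimately matters is the gzipped size that goes over the wire. A small Python helper can compare a library before and after compilation, both raw and gzipped at nginx's level 6. The function name and the file names in the usage comment are hypothetical, and the Closure Compiler itself is not involved here; the helper only measures its input and output.

```python
import gzip


def size_report(original: bytes, minified: bytes, level: int = 6) -> dict:
    """Raw and gzipped sizes (in bytes) of a file before and after minification."""
    return {
        "original": len(original),
        "original_gz": len(gzip.compress(original, compresslevel=level)),
        "minified": len(minified),
        "minified_gz": len(gzip.compress(minified, compresslevel=level)),
    }


# Typical use (hypothetical file names):
# from pathlib import Path
# print(size_report(Path("library.js").read_bytes(), Path("library.min.js").read_bytes()))
```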

Iter 4: Add Cache-control

Cache-Control headers tell browsers how long they may reuse a cached copy of a file before requesting it again, so you can tune the number of requests per file type. In my case, HTML and JS files change rarely, but I have CSV files that I update every 5 minutes. Some background info here (keycdn.com).

Note that this will not improve performance on the first load, only on subsequent requests.

I used settings found here (serverfault.com):

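```nginx
server {
    # Cache control - cache regular files for 30d
    location ~* \.(?:js|css|png|jpg|jpeg|gif|ico)$ {
        expires 30d;
        # Note: an add_header here replaces any add_header directives inherited
        # from the server level (e.g. security headers) for these files
        add_header Cache-Control "public, no-transform";
    }

    # Cache control - csv files are updated continuously, so make browsers
    # revalidate them on every request ('expires 0' sends max-age=0)
    location ~* \.(?:csv)$ {
        expires 0;
    }
}
```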
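As an illustration of what these headers mean for the browser, here is a simplified Python model of the freshness check. It ignores `Expires`, heuristic caching and most other Cache-Control directives, and the function names are hypothetical. It assumes `expires 30d` yields `max-age=2592000` and `expires 0` yields `max-age=0`, as the nginx documentation describes for a positive or zero time. nginx actually sends the `add_header` value as a second Cache-Control header, which HTTP treats the same as the comma-joined string used here.

```python
import re
from typing import Optional

THIRTY_DAYS = 30 * 24 * 60 * 60  # 'expires 30d' in seconds


def max_age(cache_control: str) -> Optional[int]:
    """Extract the max-age directive (in seconds) from a Cache-Control value."""
    match = re.search(r"(?:^|,)\s*max-age=(\d+)", cache_control)
    return int(match.group(1)) if match else None


def is_fresh(cache_control: str, age_seconds: int) -> bool:
    """Simplified: may a cached copy of this age be reused without contacting the server?"""
    if "no-cache" in cache_control or "no-store" in cache_control:
        return False
    limit = max_age(cache_control)
    return limit is not None and age_seconds < limit


# Headers roughly as produced by the two location blocks above.
js_header = f"max-age={THIRTY_DAYS}, public, no-transform"
csv_header = "max-age=0"

print(is_fresh(js_header, age_seconds=3600))  # True: reused for up to 30 days
print(is_fresh(csv_header, age_seconds=1))    # False: revalidated every time
```

With `max-age=0` the browser may still store the CSV, but it asks the server each time whether the file changed, and nginx can answer with a short 304 Not Modified when it has not.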